Joint Probability Density Function or Likelihood Function:

$f(x_1, x_2, …, x_n|\theta) = f(x_1|\theta) f(x_2|\theta) … f(x_N| \theta) $

$f(x_1, x_2, …, x_n|\theta) = \prod_{i=2}^n f(X_i | \theta) =L(\theta)$

A likelihood function is the density function regarded as a function of \theta.

$ L(\theta |x) = \prod f(x|\theta) $, $\theta \in \Theta$

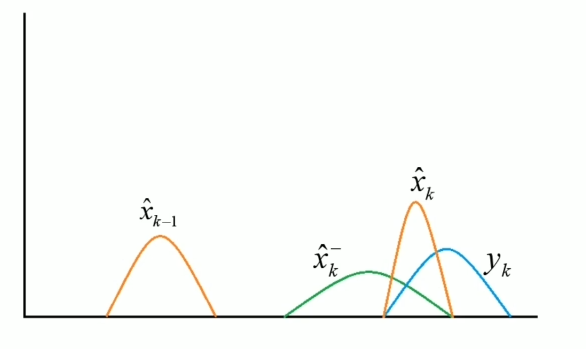

As we know the sample, we need to ‘guess’ the parameter \theta to let the joint prob distribution have a maximum probability.

The Maximum Likelihood Estimator (MLE) is :

$ \hat{\theta}(x) = arg\max_{\theta} L(\theta|x) $

To simplify the calculation, we normally apply a logarithm transformation,

$$ l(\theta)= log\ L $$

$$log L(\theta |x) =log \prod f(x|\theta)= \sum_{i=1}^N log\ f_{\theta} (y_i) $$

As the logarithm transformation is monotonous,

$$ \hat{\theta}(x) = arg\max_{\theta} L(\theta|x) \Leftrightarrow $$

$$\hat{\theta}(x) = arg\max_{\theta} l(\theta|x) $$

To review it, we aim to estimate the parameters. The logic of the MLE estimator is that we guess a probability distribution, fitting into our data (sample). Then, we find or guess the value of parameters such that the joint probability function (likelihood function) achieves the maximum value.

In another word, we guess the probability dist and parameters to make sample data achieve highest possibility.

To find the argmax_{\theta} l(\theta) is equivalent to argmin_{\theta} -l(\theta)

A Normal Distribution Example

A normal distribution example,for random sample x_i, i=1, 2, 3, …, N

$$f_{\theta}(x) = \frac{1}{\sqrt{2\pi \sigma^2}}e^{-\frac{(x-\mu)^2}{2\sigma^2}} $$

We substitute f_{\theta}(x) into the log-likelihood distribution, then we find,

$$ l(\mu, \sigma^2)=log\ L(\mu,\sigma^2) $$

$$= -\frac{n}{2}\big( log2\pi + log\sigma^2 \big) -\frac{1}{2\sigma^2}\sum_{i=1}^n (x_i -\mu)^2 $$

Taking the First Order Conditions, F.O.C.

By setting \frac{\partial l}{\partial \mu}= 0 and \frac{\partial l}{\partial \sigma^2}= 0, we solve \mu_{MLE} and \sigma^2_{MLE}.

$$ \mu_{MLE} =\bar{x}$$

$$\sigma^2_{MLE} =\frac{1}{n} \sum_{i=1}^n (x_i – \bar{x})$$

P.S. We can prove that there are the local maximum, coz the second partial derivatives are negative.

def MLE_norm(x):

mu_hat = np.mean(x)

sigma2_hat = np.var(x)

return mu_hat, sigma2_hat

#----------

mu = 5

sigma = 2.5

N = 10000

np.random.seed(0)

x = np.random.normal( mu, sigma, size=(N,) )

#----------

mu_hat, sigma2_hat = MLE_norm(x)

for_mu_hat = '\hat{\mu} = '+format(round(mu_hat,2))+''

for_sigma2_hat = '\hat{\sigma} = '+format(round(np.sqrt(sigma2_hat),2))+''

print('The MLE for data is:')

display(Latex(for_mu_hat))

display(Latex(for_sigma2_hat))By our calculation, we know that mathematically the MLE estimator of mu and sigma are the mean and variance.

Perform MLE Numerically

If the log-likelihood function wasn’t continuous or differentiable. Can solve numerically through an optimisation problem where the objective function is …

In other words, sometimes we cannot calculate the MLE estimator by hand, because the log-likelihood function might not be differentiable. What can we do?

We apply the computer to interactively ‘guess’ values (Optimizer) that can end up with the maximum log-likelihood (or a minimum of negative log-likelihood).

The maximum likelihood estimator (MLE):

$$\hat{\theta}(x) = arg max_{\theta} L(\theta|x)$$

$$\hat{\theta}(x) = arg max_{\theta} L(\theta|x)$$

$$L(\theta) = \prod_{i=1}^N f(X_i | \theta) $$

$$ log\ f(X|\theta)=\sum_{i=1}^N log\ f(x_i|\theta)$$

$$ arg\max_{\theta} l(\theta) \Leftrightarrow arg\min_{\theta} -l(\theta) $$

Why we use the minimizer instead? There is only minimizer available in the scipy package, and those mini&max are basically equivalent.

def log_likelihood(theta, x):

mu = theta[0]

sigma = theta[1]

l_theta = np.sum( np.log( sc.stats.norm.pdf(x, loc=mu, scale=sigma) ) )

# there is a negative sign in front. We minimise it later.

return -l_theta

# Constraint Function that Restrict sigma to be positive.

def sigma_pos(theta):

sigma = np.abs(theta[1])

return sigma

#--------

cons_set = {'type':'ineq', 'fun': sigma_pos}

theta0 = [2,3] # inital guess. there could be any random numbers, coz it is just the intital value inputed into the optimiser

# find the minimum value of the log likelihood function

opt = sc.optimize.minimize(fun=log_likelihood, x0=theta0, args=(x,), constraints=cons_set)

for_mu_hat = '\hat{\mu} = '+format(round(opt.x[0],2))+''

for_sigma2_hat = '\hat{\sigma} = '+format(round(opt.x[1],2))+''

print('The MLE for data is:')

display(Latex(for_mu_hat))

display(Latex(for_sigma2_hat))